Data engineering is the foundation; insights are the elevation

Building the bridges that turn data into insights – that’s the art of data engineering

Data engineering is fundamental for businesses seeking to collect, process, and leverage data effectively. Our data engineering services are designed to help you build a robust data infrastructure that supports data-driven decision-making, analytics, and innovation.

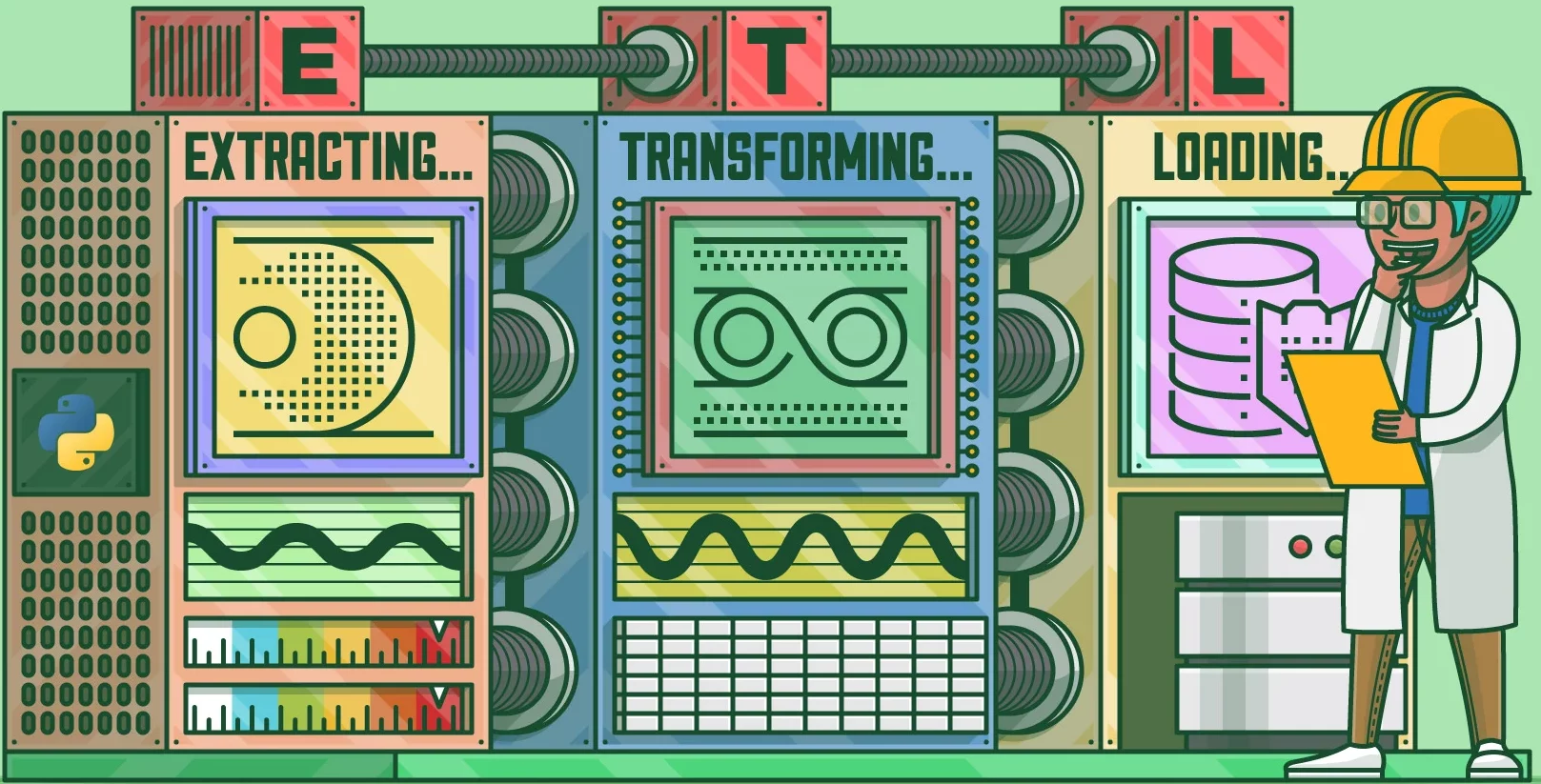

Our data engineering services encompass a range of critical data-related functions. We excel in data integration, making it easy for you to collect and unify data from various sources. Our experts design and maintain data warehouses for efficient data storage and retrieval, and we develop ETL (Extract, Transform, Load) processes to ensure your data is structured and ready for analysis.
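
As a rough illustration of the ETL pattern, the Python sketch below extracts rows from a CSV export, transforms them into typed, normalized records, and loads them into a table. It is a minimal sketch under stated assumptions: the sample data and the `orders` table are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3
from typing import Dict, List, Tuple

# Hypothetical raw export from a source system (illustrative data only).
RAW_CSV = """order_id,customer,amount,currency
1001,Alice ,19.99,usd
1002,bob,5.00,USD
1003,alice,42.50,usd
"""

Record = Tuple[int, str, float, str]


def extract(raw_text: str) -> List[Dict[str, str]]:
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows: List[Dict[str, str]]) -> List[Record]:
    """Transform: cast types and normalize customer names and currency codes."""
    return [
        (
            int(r["order_id"]),
            r["customer"].strip().lower(),
            float(r["amount"]),
            r["currency"].strip().upper(),
        )
        for r in rows
    ]


def load(records: List[Record], conn: sqlite3.Connection) -> None:
    """Load: upsert records so re-running the job does not duplicate rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
    load(transform(extract(RAW_CSV)), conn)
    query = "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
    for customer, total in conn.execute(query):
        print(customer, round(total, 2))
```

Keying the upsert on `order_id` keeps the load step idempotent, which matters when a failed job is retried.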

We specialize in building data pipelines that automate data movement and transformation, supporting both real-time and batch processing. If you’re dealing with vast data volumes, our Big Data processing capabilities, built on technologies such as Hadoop and Apache Spark, distribute the work across a cluster so you can analyze even very large datasets efficiently.
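
To show how one pipeline can serve both batch and streaming inputs, here is a minimal Python sketch built from lazy generator stages. The stage names, the `sensor,value` input format, and the 0 to 100 validity range are hypothetical choices for illustration; production pipelines would typically run on an engine such as Apache Spark rather than plain Python.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List

Record = Dict[str, object]


def parse(lines: Iterable[str]) -> Iterator[Record]:
    """Stage 1: turn raw 'sensor,value' lines into records."""
    for line in lines:
        sensor, value = line.split(",")
        yield {"sensor": sensor.strip(), "value": float(value)}


def keep_valid(records: Iterable[Record]) -> Iterator[Record]:
    """Stage 2: drop readings outside an assumed valid range of 0 to 100."""
    for r in records:
        if 0.0 <= r["value"] <= 100.0:
            yield r


def micro_batches(records: Iterable[Record], size: int) -> Iterator[List[Record]]:
    """Stage 3: group records into fixed-size batches for the downstream sink."""
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch


def pipeline(source: Iterable[str], batch_size: int) -> Iterator[List[Record]]:
    """Chain the stages lazily; nothing runs until the caller iterates.

    Because each stage pulls one item at a time, the same chain works on a
    finite list (batch) or an unbounded iterator such as a queue consumer.
    """
    return micro_batches(keep_valid(parse(source)), batch_size)


if __name__ == "__main__":
    raw = ["t1, 20.5", "t2, 150.0", "t1, 21.0", "t3, 19.8"]
    for batch in pipeline(raw, batch_size=2):
        print(batch)
```

The assignment expression in `micro_batches` requires Python 3.8 or later.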

Real-time data streaming is another focus, enabling you to respond to changing data and events as they happen. We also prioritize data quality, implementing data cleansing and quality assurance measures. Data governance practices are crucial to us, ensuring your data remains secure, compliant, and accessible.
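
Data cleansing and quality checks are often expressed as small, testable rules. The Python sketch below trims and normalizes fields, removes duplicate records by key, and then routes rows that fail validation into a separate list so they can be reported rather than silently dropped. The field names (`customer_id`, `email`) and the deliberately simple email pattern are assumptions for illustration.

```python
import re
from typing import Dict, List, Tuple

Row = Dict[str, str]

# Deliberately simple pattern: one "@" and a dot in the domain part.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def cleanse(rows: List[Row]) -> List[Row]:
    """Trim whitespace, lowercase emails, and keep the first row per customer_id."""
    seen = set()
    cleaned = []
    for raw in rows:
        row = {k: (v or "").strip() for k, v in raw.items()}
        row["email"] = row.get("email", "").lower()
        key = row.get("customer_id", "")
        if key and key in seen:
            continue  # duplicate of a customer we have already kept
        if key:
            seen.add(key)
        cleaned.append(row)
    return cleaned


def validate(rows: List[Row]) -> Tuple[List[Row], List[Tuple[int, str]]]:
    """Split rows into those that pass and (index, reason) pairs for failures."""
    passed: List[Row] = []
    failures: List[Tuple[int, str]] = []
    for i, row in enumerate(rows):
        if not row.get("customer_id"):
            failures.append((i, "missing customer_id"))
        elif not EMAIL_RE.match(row.get("email", "")):
            failures.append((i, "invalid email"))
        else:
            passed.append(row)
    return passed, failures


if __name__ == "__main__":
    raw_rows = [
        {"customer_id": "c1", "email": " Alice@Example.com "},
        {"customer_id": "c1", "email": "alice@example.com"},
        {"customer_id": "", "email": "nobody@example.com"},
        {"customer_id": "c2", "email": "not-an-email"},
    ]
    good, bad = validate(cleanse(raw_rows))
    print(good)
    print(bad)
```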

Customized solutions are our strength, as we design and develop data engineering solutions to meet your unique business needs. Our consulting services help you plan your data strategy, and our training programs ensure your team can effectively manage and maintain data engineering solutions. With our Data Engineering services, you can harness the power of data for informed decision-making and operational efficiency.

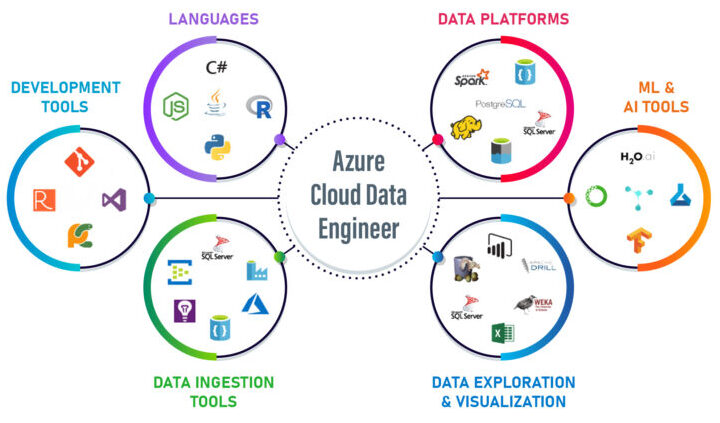

Azure Data Engineering: Where cloud meets data, innovation follows

Embark on a data transformation journey with our Azure Data Engineering services, which integrate Azure Data Factory (ADF), Azure Databricks, Azure Synapse Analytics, and Power BI into a cohesive ecosystem. Azure Data Factory orchestrates efficient, scalable data workflows, moving and transforming data across your organization. With Azure Databricks, we unlock advanced analytics and machine learning, processing large datasets to extract valuable insights. Azure Synapse Analytics, loaded through Azure Data Factory pipelines, serves as a high-performance data warehouse for large-scale analytics. Finally, Power BI sits at the top of the stack, providing interactive, visually compelling dashboards that make data-driven decisions easier. Our Azure Data Engineering services bring together the best of Microsoft’s cloud technologies, empowering your organization to derive actionable insights, enhance productivity, and navigate the data landscape with confidence.

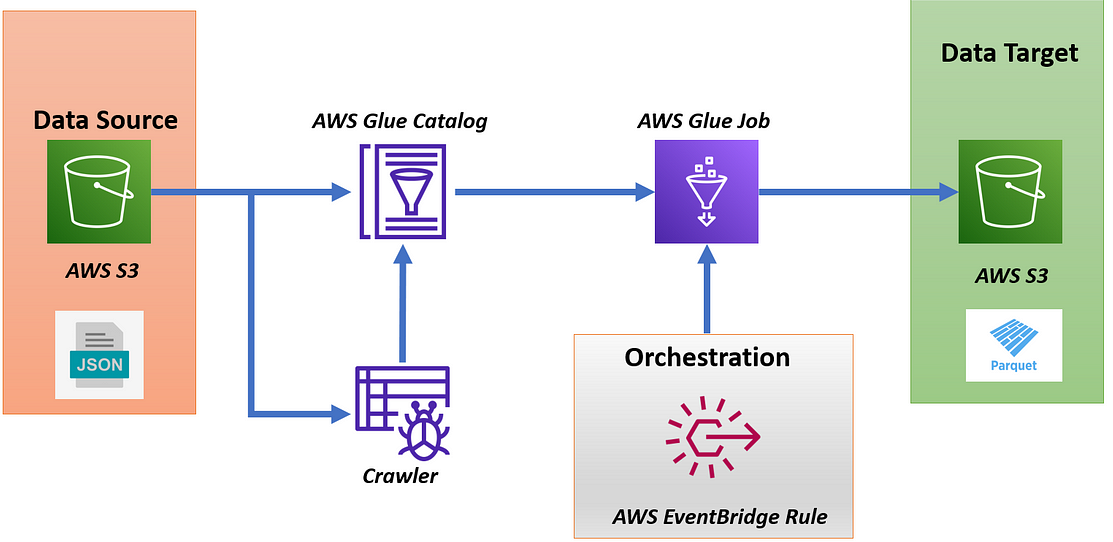

In the AWS cloud, our data engineers sculpt possibilities, turning data into innovation

Unlock the full potential of your data landscape with our AWS Data Engineering services, which integrate a suite of powerful AWS data tools. Using AWS Glue, we automate complex ETL processes, ensuring a streamlined flow of data across your organization. Amazon Redshift, a high-performance cloud data warehouse, serves as the backbone for advanced analytics, providing rapid insights for informed decision-making. Amazon S3 provides scalable, secure storage for data lakes, accommodating the dynamic needs of your growing datasets. AWS Lambda adds serverless, event-driven data processing, improving flexibility and cost efficiency in your workflows. With Amazon EMR handling large-scale big data processing and AWS Data Pipeline orchestrating complex workflows, our AWS Data Engineering services help your organization harness the agility, scalability, and innovation of AWS data tools.

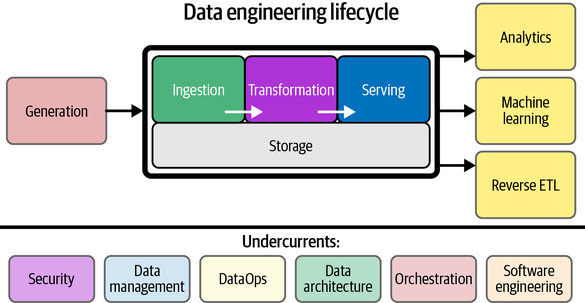

Navigating the Data Engineering Lifecycle: From Ingestion to Insightful Delivery

The steps below form a continuous cycle, because data engineering is an iterative process that adapts to the evolving needs of an organization’s data ecosystem.

Data Ingestion

In the initial phase, data engineers focus on collecting and ingesting raw data from various sources into a centralized system. This involves extracting data from databases, logs, APIs, or other repositories and bringing it into a format suitable for processing and analysis.
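
A minimal Python sketch of ingestion might look like the following: each source gets a small adapter that maps its native format onto one shared record shape, and the landing step stamps every record with its source and ingestion time. The JSON payload layout, CSV column names, and field names are hypothetical; real ingestion would read from live databases, log streams, or HTTP APIs instead of in-line strings.

```python
import csv
import io
import json
from datetime import datetime, timezone
from typing import Dict, List

Record = Dict[str, str]


def from_api(payload: str) -> List[Record]:
    """Adapter for a hypothetical API response: {"users": [{"id": ..., "name": ...}]}."""
    return [
        {"id": str(u["id"]), "name": u["name"], "source": "api"}
        for u in json.loads(payload)["users"]
    ]


def from_db_export(text: str) -> List[Record]:
    """Adapter for a hypothetical CSV database export with user_id,full_name columns."""
    return [
        {"id": r["user_id"], "name": r["full_name"], "source": "db_export"}
        for r in csv.DictReader(io.StringIO(text))
    ]


def land(batches: List[List[Record]], ingested_at: datetime) -> List[Record]:
    """Union all source batches into one landing set, stamped with ingestion time."""
    stamp = ingested_at.isoformat()
    return [{**rec, "ingested_at": stamp} for batch in batches for rec in batch]


if __name__ == "__main__":
    landed = land(
        [
            from_api('{"users": [{"id": 1, "name": "Ada"}]}'),
            from_db_export("user_id,full_name\n2,Grace\n"),
        ],
        ingested_at=datetime.now(timezone.utc),
    )
    for rec in landed:
        print(rec)
```

Passing `ingested_at` in explicitly, rather than reading the clock inside `land`, keeps the landing step deterministic and easy to test.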

Data Processing and Transformation

Once the data is ingested, the next step is processing and transforming it to meet the required format and structure. This often involves cleaning, aggregating, and enriching the data. Tools like Apache Spark, AWS Glue, or Azure Data Factory are commonly used for these tasks to ensure the data is prepared for analysis.
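
The cleaning, aggregating, and enriching steps described above can be sketched in plain Python; at scale the same logic would usually be expressed in a tool such as Apache Spark, AWS Glue, or Azure Data Factory. This is a minimal, hypothetical example: the event fields and the `REGION_NAMES` lookup table are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical reference data used to enrich region codes.
REGION_NAMES = {"eu": "Europe", "na": "North America"}


def clean(events: List[dict]) -> List[dict]:
    """Drop events with no amount and normalize region codes."""
    return [
        {**e, "region": e["region"].strip().lower()}
        for e in events
        if e.get("amount") is not None
    ]


def aggregate(events: List[dict]) -> Dict[str, dict]:
    """Sum revenue and count orders per region."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for e in events:
        totals[e["region"]] += e["amount"]
        counts[e["region"]] += 1
    return {r: {"revenue": totals[r], "orders": counts[r]} for r in totals}


def enrich(summary: Dict[str, dict]) -> List[dict]:
    """Attach a readable region name; unknown codes are labeled 'Unknown'."""
    return [
        {"region": r, "region_name": REGION_NAMES.get(r, "Unknown"), **metrics}
        for r, metrics in sorted(summary.items())
    ]


if __name__ == "__main__":
    events = [
        {"region": "EU ", "amount": 10.0},
        {"region": "eu", "amount": 5.0},
        {"region": "na", "amount": None},
        {"region": "na", "amount": 7.5},
        {"region": "apac", "amount": 3.0},
    ]
    for row in enrich(aggregate(clean(events))):
        print(row)
```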

Data Storage

After processing, the data needs to be stored efficiently for easy retrieval and analysis. Data engineers choose appropriate storage solutions such as data warehouses, data lakes, or NoSQL databases based on the specific needs of the organization. Popular platforms for data lake storage include Amazon S3, Azure Data Lake Storage, and Google Cloud Storage.
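
One common way to organize a data lake is Hive-style partitioning, where each value of a partition column gets its own `column=value` directory so queries can skip irrelevant data. The Python sketch below writes JSON Lines files into such a layout on the local filesystem and reads back a single partition. It is an illustrative assumption rather than a prescribed design: on Amazon S3 the directories would become object key prefixes, and columnar formats such as Parquet are usually preferred over JSON Lines.

```python
import json
import tempfile
from collections import defaultdict
from pathlib import Path
from typing import Dict, Iterable, List


def write_partitioned(records: Iterable[Dict], root: Path, key: str) -> List[Path]:
    """Write records as JSON Lines, one `key=value` directory per partition.

    Each call overwrites the partition file, so re-running a load replaces
    rather than appends to the affected partitions.
    """
    groups: Dict[str, List[Dict]] = defaultdict(list)
    for rec in records:
        groups[rec[key]].append(rec)
    written = []
    for value, rows in groups.items():
        part_dir = root / f"{key}={value}"
        part_dir.mkdir(parents=True, exist_ok=True)
        path = part_dir / "part-0000.jsonl"
        with path.open("w", encoding="utf-8") as fh:
            for row in rows:
                fh.write(json.dumps(row) + "\n")
        written.append(path)
    return written


def read_partition(root: Path, key: str, value: str) -> List[Dict]:
    """Read one partition; files in other partition directories are never opened."""
    path = root / f"{key}={value}" / "part-0000.jsonl"
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh]


if __name__ == "__main__":
    events = [
        {"event_date": "2024-05-01", "user": "a"},
        {"event_date": "2024-05-01", "user": "b"},
        {"event_date": "2024-05-02", "user": "c"},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        write_partitioned(events, root, "event_date")
        print(read_partition(root, "event_date", "2024-05-01"))
```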

Data Delivery

The final step involves delivering the processed and transformed data to end-users or downstream systems. Data engineers design pipelines to facilitate the movement of data to visualization tools, business intelligence platforms, or other applications where stakeholders can access and derive insights from the structured and organized data.
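
One lightweight delivery pattern is to publish a stable reporting view over curated tables and export it in a format that BI tools or downstream systems can consume. The Python sketch below does this with SQLite and CSV; the `orders` table, the `v_daily_revenue` view, and the CSV hand-off are hypothetical stand-ins for a production warehouse and BI connector.

```python
import csv
import io
import sqlite3


def publish_view(conn: sqlite3.Connection) -> None:
    """Expose a stable, BI-friendly view over the curated orders table."""
    conn.execute("DROP VIEW IF EXISTS v_daily_revenue")
    conn.execute(
        "CREATE VIEW v_daily_revenue AS "
        "SELECT order_date, ROUND(SUM(amount), 2) AS revenue, COUNT(*) AS orders "
        "FROM orders GROUP BY order_date"
    )


def export_csv(conn: sqlite3.Connection, view: str) -> str:
    """Serialize a view to CSV text with a header row, ordered by its first column."""
    if not view.isidentifier():
        # The view name is interpolated into SQL, so only allow plain identifiers.
        raise ValueError(f"unexpected view name: {view!r}")
    cur = conn.execute(f"SELECT * FROM {view} ORDER BY 1")
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow([col[0] for col in cur.description])
    writer.writerows(cur.fetchall())
    return buf.getvalue()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_date TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("2024-05-01", 10.0), ("2024-05-01", 2.5), ("2024-05-02", 4.0)],
    )
    publish_view(conn)
    print(export_csv(conn, "v_daily_revenue"))
```

Consumers query the view rather than the underlying table, so the table can be restructured later without breaking dashboards, as long as the view keeps the same columns.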